Towards Expressive Graph Neural Networks Beyond Message-Passing

In this project, we develop expressive neural network architectures for learning on graphs.

Expressive Pure Graph Transformers without Message-Passing

Graph Inductive Biases in Transformers without Message Passing (ICML 2023)

SOTA in 2023! Still Competitive Now!

TL;DR

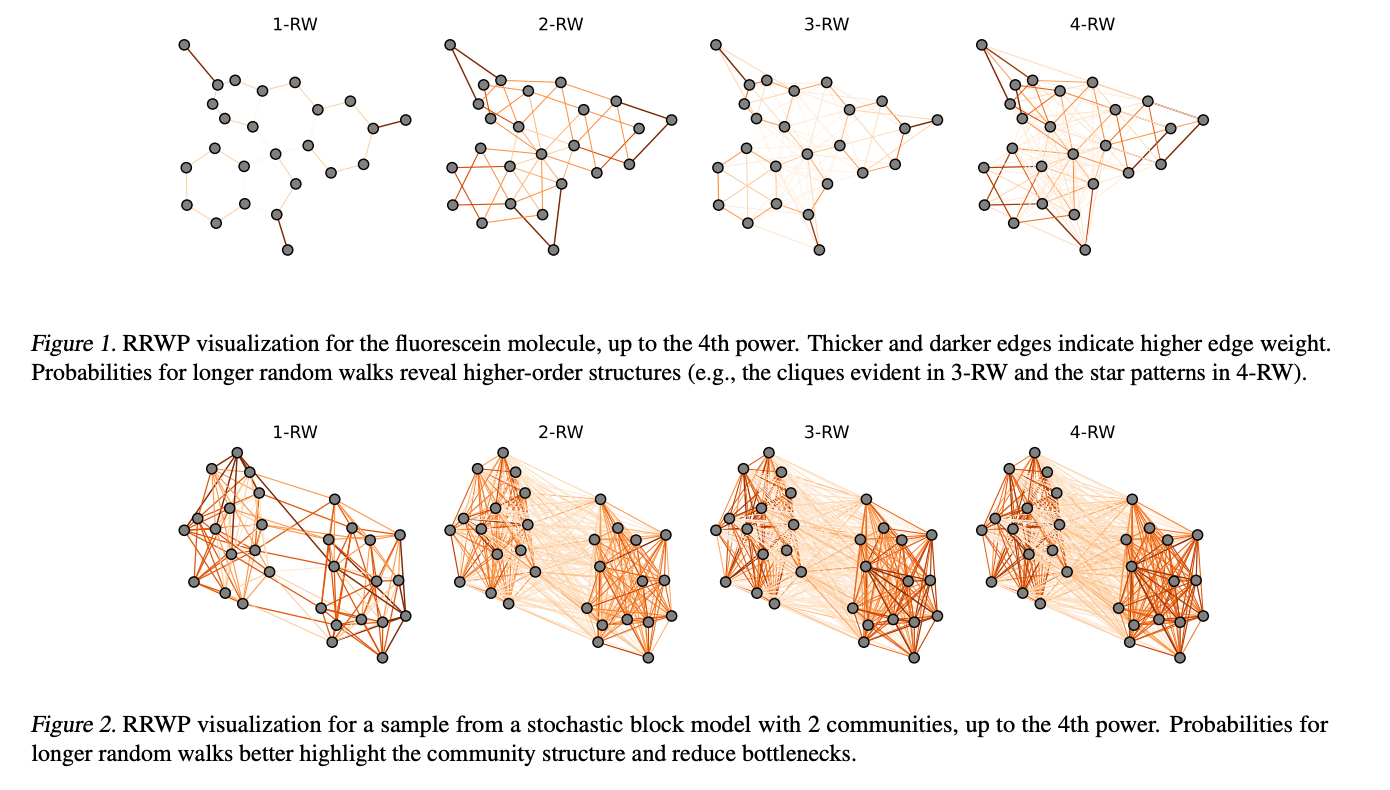

- Propose a novel graph positional encoding scheme: Relative Random Walk Probabilities (RRWP)

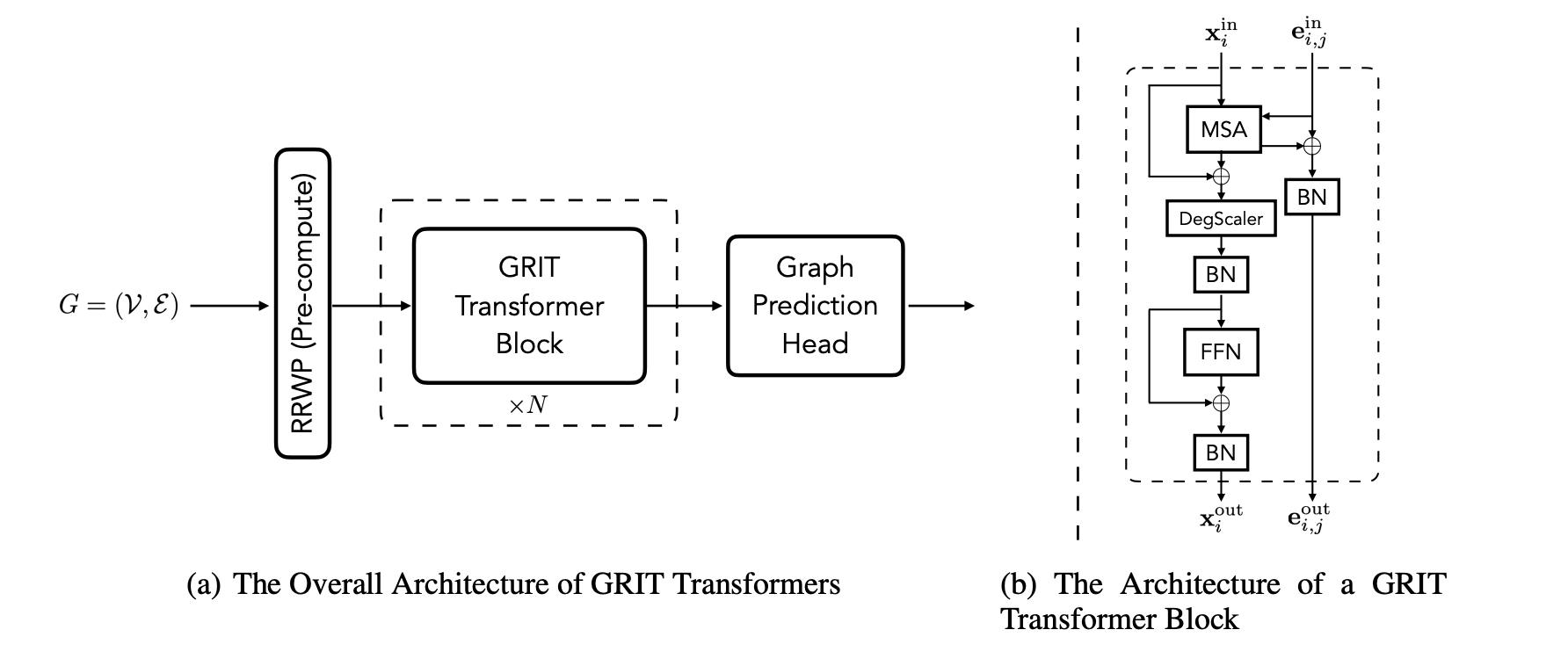

- Propose a graph transformer architecture without MPNNs. (Pure Transformer! Not just a painkiller for MPNNs!)

- Outperform all previous graph transformers, with or without MPNNs.
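
To make the RRWP idea concrete, here is a minimal sketch of how relative random walk probabilities could be computed. It assumes the formulation in which the encoding of a node pair (i, j) stacks the (i, j) entries of I, M, M^2, ..., M^(K-1), where M = D^-1 A is the random-walk transition matrix. The function name `rrwp`, the parameter `num_steps`, and the zero-row handling of isolated nodes are illustrative assumptions, not the authors' released code.

```python
import numpy as np


def rrwp(adj: np.ndarray, num_steps: int) -> np.ndarray:
    """Return an (n, n, num_steps) tensor of random-walk probabilities.

    Slice k holds M^k, where M = D^-1 A, so entry [i, j, k] is the
    probability that a k-step random walk starting at i ends at j.
    """
    adj = np.asarray(adj, dtype=float)
    deg = adj.sum(axis=1, keepdims=True)
    # Assumption: isolated nodes (degree 0) get an all-zero transition row.
    walk = np.divide(adj, deg, out=np.zeros_like(adj), where=deg > 0)

    powers = [np.eye(adj.shape[0])]  # step 0: identity
    for _ in range(num_steps - 1):
        powers.append(powers[-1] @ walk)
    return np.stack(powers, axis=-1)


# Path graph 0 - 1 - 2
adj = np.array([[0, 1, 0],
                [1, 0, 1],
                [0, 1, 0]])
enc = rrwp(adj, num_steps=3)
```

Under this sketch, `enc[i, j]` is a length-K vector that could serve as a relative encoding for the pair (i, j), and the diagonal entries `enc[i, i]` could serve as a per-node encoding.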

Graph Convolution on Pseudo-coordinates

CKGConv: General Graph Convolution with Continuous Kernels (ICML 2024)