HardCore Generation: Generating Hard UNSAT Problems for Data Augmentation

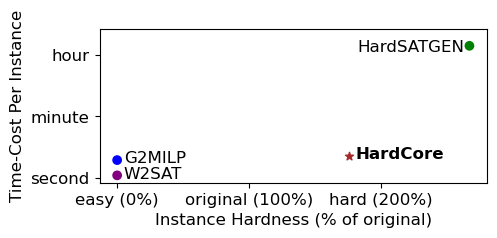

Efficiently determining the satisfiability of a boolean equation -- known as the SAT problem for brevity -- is crucial in various industrial problems. Recently, the advent of deep learning methods has introduced significant potential for enhancing SAT solving. However, a major barrier to the advancement of this field has been the scarcity of large, realistic datasets. The majority of current public datasets are either randomly generated or extremely limited, containing only a few examples from unrelated problem families. These datasets are inadequate for meaningful training of deep learning methods. In light of this, researchers have started exploring generative techniques to create data that more accurately reflect SAT problems encountered in practical situations. These methods have so far suffered from either the inability to produce challenging SAT problems or time-scalability obstacles. In this paper we address both by identifying and manipulating the key contributors to a problem's ``hardness'', known as cores. Although some previous work has addressed cores, the time costs are unacceptably high due to the expense of traditional heuristic core detection techniques. We introduce a fast core detection procedure that uses a graph neural network. Our empirical results demonstrate that we can efficiently generate problems that remain hard to solve and retain key attributes of the original example problems. We show via experiment that the generated synthetic SAT problems can be used in a data augmentation setting to provide improved prediction of solver runtimes.

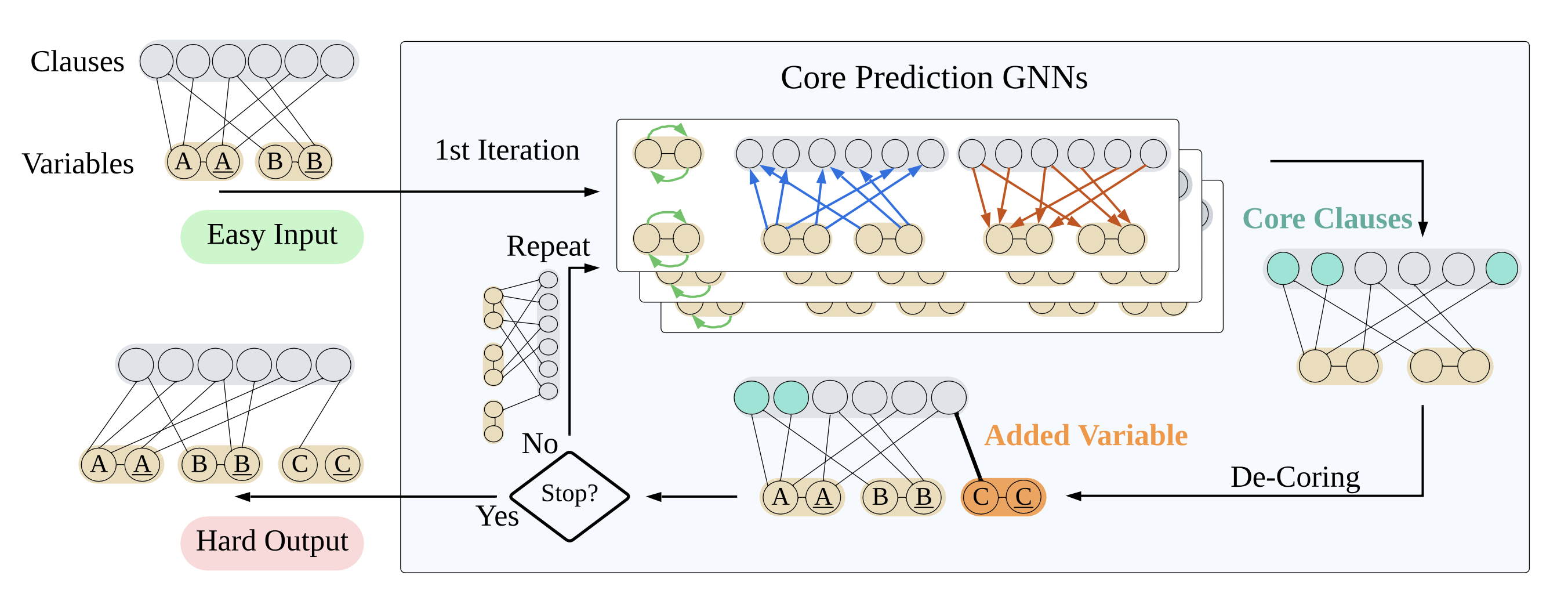

Our method begins by producing generated problems using a low-cost random generator. This generation is likely to not be hard due to the notion of "hardness collapse", in which trivially easy-to-detect conflicts are introduced during generation. To address this, we iteratively detect these conflicts using our low-cost learned GNN approximation of very expensive core detection algorithms, we break the conflict, and repeat iteratively.

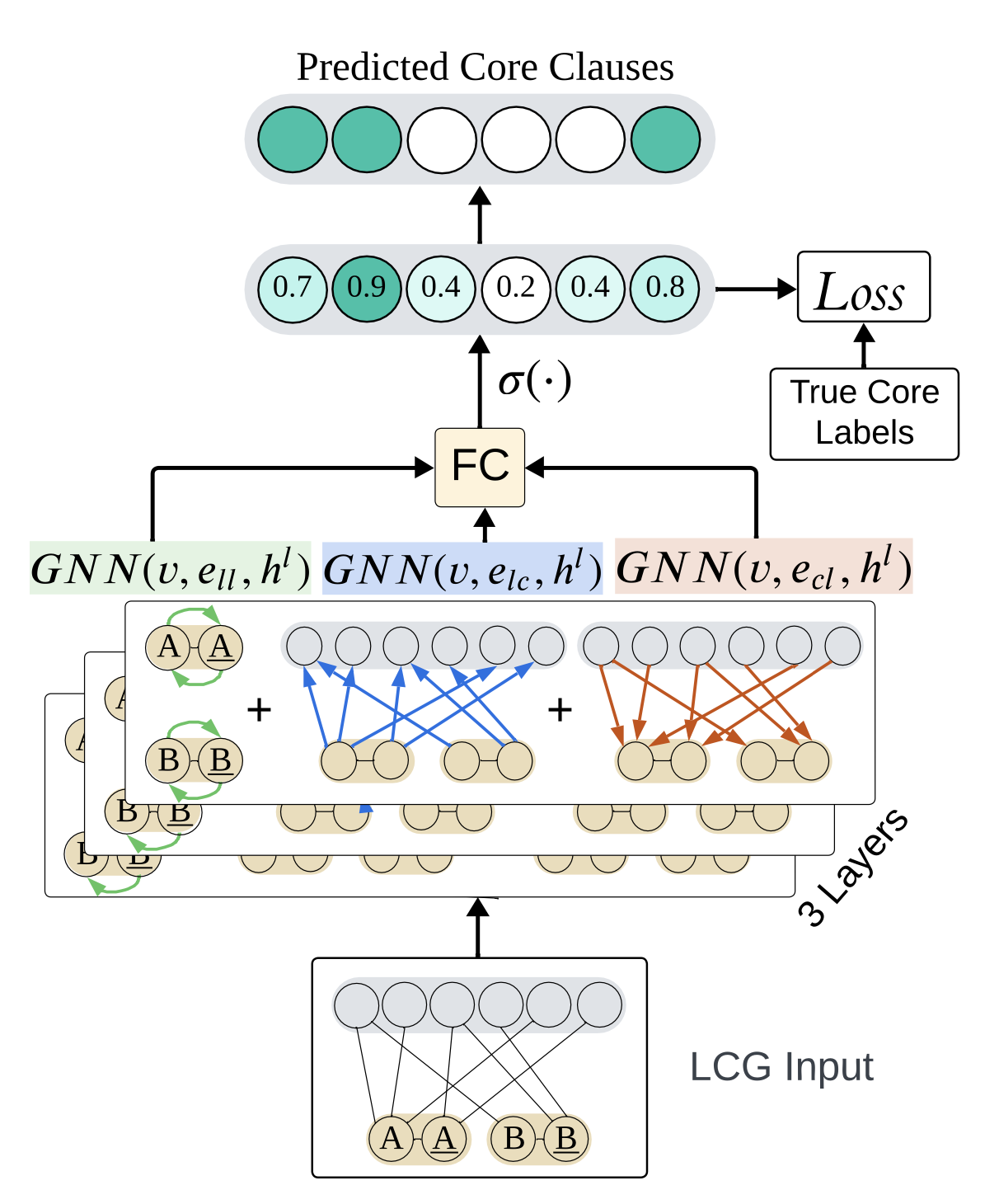

Our GNN Core prediction architecture is designed to operate on the (augmented) bi-partite graph representation (LCG) of SAT problems. At each layer we compute separate node embeddings according to the literal nodes' inverse-polarity counterpart, according to the literal nodes' adjacent clause nodes, and according to the clause nodes' literal adjacencies.

Team

Citation

This project was accepted in NeurIPS 2024.

@inproceedings{HardCore,

title = {Diffusing Gaussian Mixtures for Generating Categorical Data},

author={Joseph Cotnareanu and Zhanguang Zhang and Hui-Ling Zhen and Yingxue Zhang and Mark Coates},

booktitle = {Proc. Adv. Neural Inf. Process. Syst.},

year = {2024}

}