Multi-resolution Time-Series Forecasting

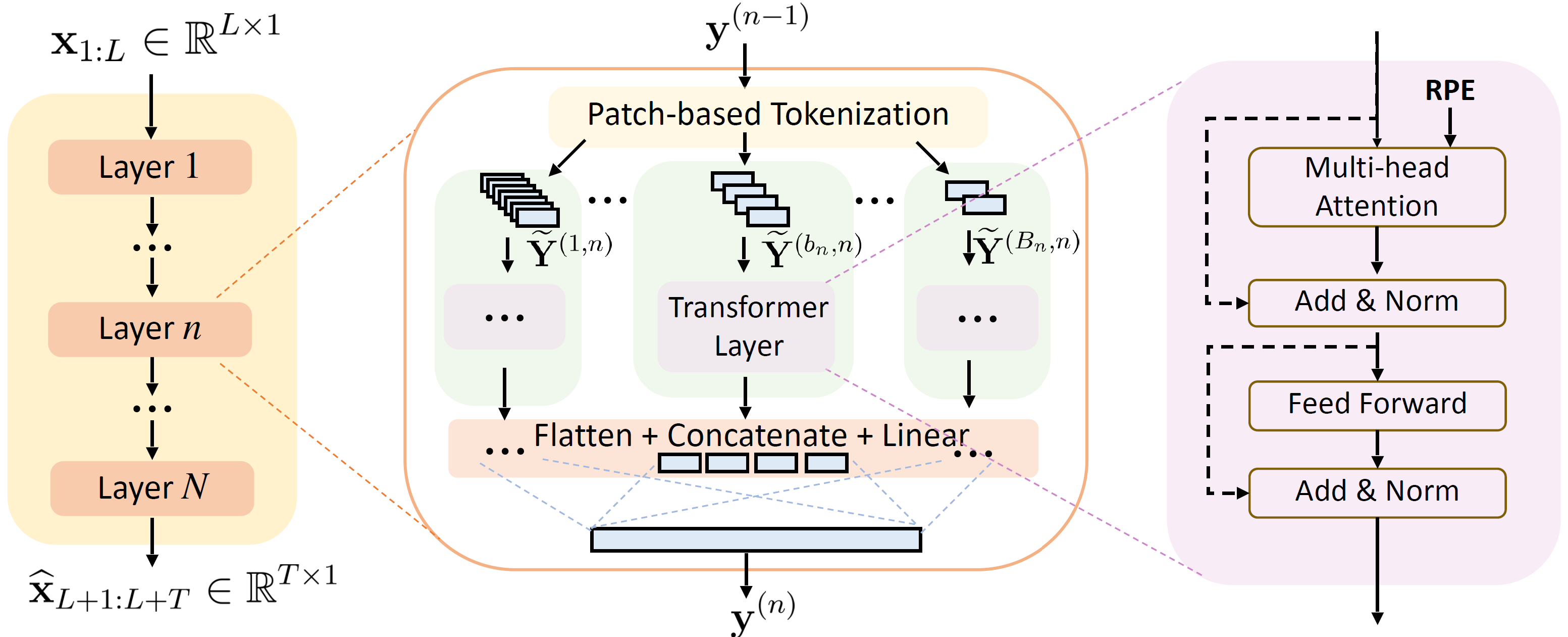

In this project, we propose the Multi-resolution Time-Series Transformer (MTST), a framework whose multi-branch architecture models diverse temporal patterns at different resolutions simultaneously.

Contribution

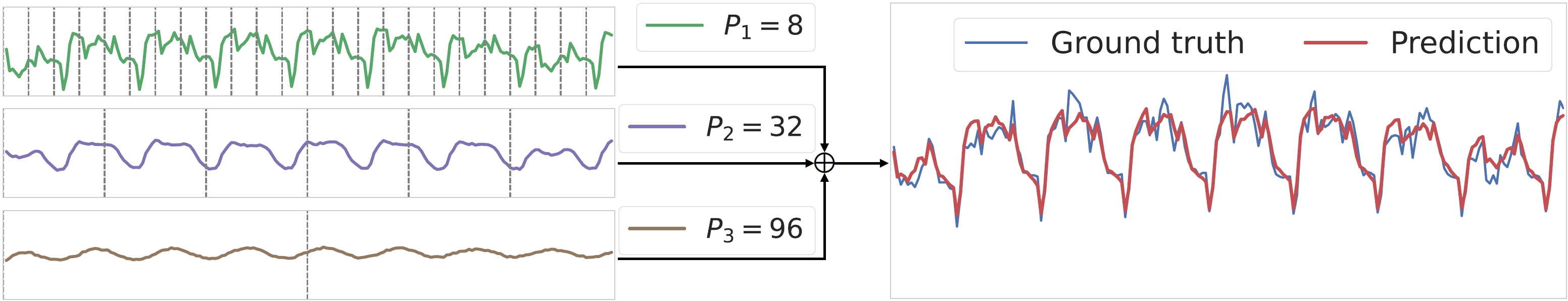

The performance of transformers for time-series forecasting has improved significantly. Recent architectures learn complex temporal patterns by segmenting a time-series into patches and using the patches as tokens. The patch size controls the ability of transformers to learn the temporal patterns at different frequencies: shorter patches are effective for learning localized, high-frequency patterns, whereas mining long-term seasonalities and trends requires longer patches.
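To make the role of the patch size concrete, the following is a minimal NumPy sketch of how a series could be segmented into patches that serve as tokens. The helper name `patchify`, the `stride` parameter, and the toy sine signal are illustrative assumptions, not code from the MTST implementation.

```python
import numpy as np

def patchify(series, patch_len, stride):
    """Split a 1-D series into patches of length patch_len taken every stride steps.

    Hypothetical helper for illustration; each row of the result is one token.
    """
    series = np.asarray(series, dtype=float)
    if series.ndim != 1:
        raise ValueError("series must be one-dimensional")
    if patch_len > len(series):
        raise ValueError("patch_len must not exceed the series length")
    n_patches = (len(series) - patch_len) // stride + 1
    starts = np.arange(n_patches) * stride
    # Row i holds the indices start_i, start_i + 1, ..., start_i + patch_len - 1.
    idx = starts[:, None] + np.arange(patch_len)[None, :]
    return series[idx]

# Toy signal with a period of 24 steps (assumed for illustration).
series = np.sin(2 * np.pi * np.arange(96) / 24)

# Short patches: many tokens, each covering a small local window.
short_tokens = patchify(series, patch_len=8, stride=8)

# Long patches: few tokens, each spanning two full periods.
long_tokens = patchify(series, patch_len=48, stride=24)
```

With short patches, each token sees only a fraction of one period, so attention operates on fine local detail. With long patches, a single token already contains whole periods, which is closer to what is needed for seasonalities and trends.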

Inspired by this observation, we propose the Multi-resolution Time-Series Transformer (MTST). By constructing multiple sets of tokens with different patch sizes, each MTST layer can model temporal patterns of different frequencies simultaneously with multi-branch self-attention.
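The multi-branch idea can be sketched roughly as follows. This is a simplified, single-head illustration with random weights, not the published architecture. The function names, the non-overlapping patches, and the fusion step (concatenating branch outputs and applying one linear map) are assumptions made for the example.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(tokens, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention over one set of tokens."""
    q, k, v = tokens @ w_q, tokens @ w_k, tokens @ w_v
    scores = q @ k.T / np.sqrt(q.shape[-1])
    return softmax(scores) @ v

def branch(series, patch_len, d_model, rng):
    """One branch: patch the series, embed the patches, attend, flatten."""
    n = len(series) // patch_len
    tokens = series[: n * patch_len].reshape(n, patch_len)  # non-overlapping patches
    w_embed = rng.normal(scale=patch_len ** -0.5, size=(patch_len, d_model))
    w_q, w_k, w_v = (
        rng.normal(scale=d_model ** -0.5, size=(d_model, d_model)) for _ in range(3)
    )
    out = self_attention(tokens @ w_embed, w_q, w_k, w_v)
    return out.reshape(-1)

def multi_branch_layer(series, patch_lens, d_model=16, seed=0):
    """Run one branch per patch size and fuse the results (assumed fusion: concat + linear)."""
    rng = np.random.default_rng(seed)
    feats = np.concatenate([branch(series, p, d_model, rng) for p in patch_lens])
    w_fuse = rng.normal(scale=feats.size ** -0.5, size=(feats.size, len(series)))
    return feats @ w_fuse  # same length as the input, so layers could be stacked

series = np.sin(2 * np.pi * np.arange(96) / 24)
layer_out = multi_branch_layer(series, patch_lens=(8, 24, 48))
```

Each branch attends over its own token set, so the branch with patch length 8 works with 12 fine-grained tokens while the branch with patch length 48 works with 2 coarse ones. Mapping the fused features back to the input length is one possible way to let several such layers be stacked.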

In contrast to many existing time-series transformers, we employ relative positional encoding, which is better suited for extracting periodic components at different scales. Extensive experiments on several real-world datasets demonstrate the effectiveness of MTST in comparison to state-of-the-art forecasting techniques.
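As a rough illustration of relative positional encoding, the sketch below adds a bias to the attention scores that depends only on the offset between two tokens, not on their absolute positions. It uses a generic bias table indexed by offset. The exact formulation used in MTST is not described on this page, so the function names and the table-based form are assumptions.

```python
import numpy as np

def relative_position_bias(n_tokens, table):
    """Build an (n_tokens, n_tokens) bias whose entry (i, j) depends only on i - j.

    table holds 2 * n_tokens - 1 values, one per offset from -(n_tokens - 1) to n_tokens - 1.
    """
    offsets = np.arange(n_tokens)[:, None] - np.arange(n_tokens)[None, :]
    return table[offsets + n_tokens - 1]

def attention_with_relative_bias(q, k, v, table):
    """Scaled dot-product attention with an additive relative-position bias."""
    n = q.shape[0]
    scores = q @ k.T / np.sqrt(q.shape[-1]) + relative_position_bias(n, table)
    scores = scores - scores.max(axis=-1, keepdims=True)
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    return weights @ v, weights

rng = np.random.default_rng(0)
n_tokens, d_model = 6, 4
table = rng.normal(size=2 * n_tokens - 1)   # stands in for learned parameters
bias = relative_position_bias(n_tokens, table)

x = rng.normal(size=(n_tokens, d_model))
out, weights = attention_with_relative_bias(x, x, x, table)
```

Because the bias for tokens 0 and 1 equals the bias for tokens 3 and 4, a pattern that repeats at a fixed lag is treated the same wherever it occurs in the window, which is the property that makes this kind of encoding a natural fit for periodic components.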

Team

Citation

This work was published at the International Conference on Artificial Intelligence and Statistics (AISTATS) 2024.

@inproceedings{zhang2024,
  title = {Multi-resolution Time-Series Transformer for Long-term Forecasting},
  author = {Zhang, Yitian and Ma, Liheng and Pal, Soumyasundar and Zhang, Yingxue and Coates, Mark},
  booktitle = {Int. Conf. Artif. Intell. Stat.},
  year = {2024}
}